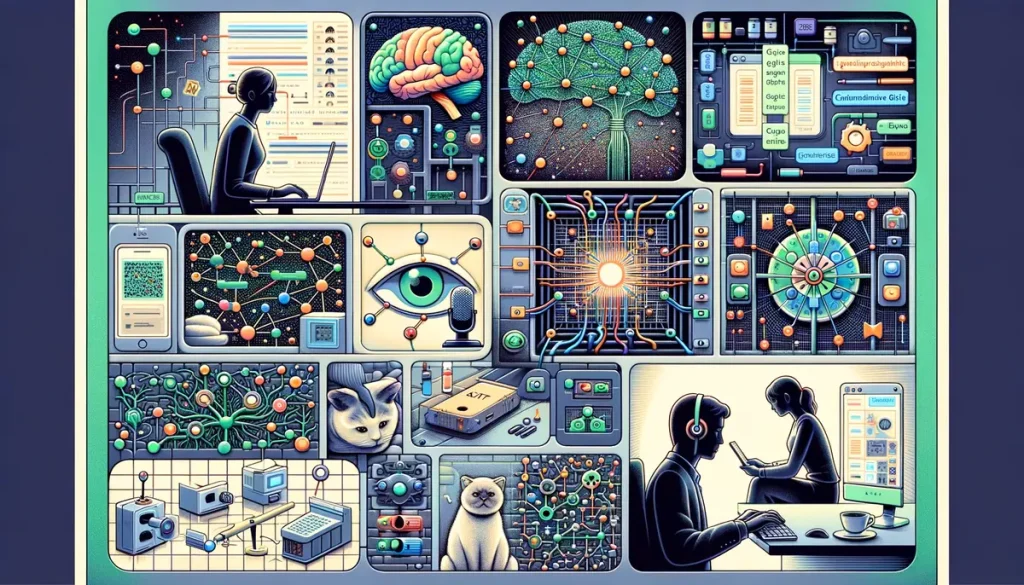

Long Short-Term Memory (LSTM) networks are a widely used answer to the limitations of conventional Recurrent Neural Networks (RNNs). Conventional RNNs struggle to retain information over long sequences, whereas LSTM networks, which are themselves a type of RNN, are designed to handle long-term dependencies more effectively. This blog post explores the architecture, functionalities, and real-world applications of LSTM networks, shedding light on their significance in artificial intelligence and machine learning.


Understanding Long Short-Term Memory Networks

Applications of LSTM Networks

LSTM in Language Modeling

One prominent application of LSTM networks is language modeling: given an input sequence, the network estimates which word or phrase is likely to come next, and it can generate coherent text by repeating that prediction step. This capability underpins features such as predictive text and auto-completion.

By analyzing patterns and dependencies within the input text, LSTM networks empower language modeling tools to provide intuitive and contextually relevant suggestions to users, enhancing their overall typing experience.
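To make the next-word idea concrete, here is a minimal sketch of a single LSTM layer reading a sequence character by character and producing a probability for each possible next character. It uses only the Python standard library. The helper names (`lstm_step`, `next_token_probs`), the tiny vocabulary, the hidden size, and the random, untrained weights are all assumptions made for illustration, so the probabilities it returns carry no meaning; a real model would learn its weights from text, usually with a deep learning framework.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def matvec(W, v):
    # Multiply a matrix (list of rows) by a vector (list of floats).
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def lstm_step(x, h_prev, c_prev, params):
    """One LSTM time step; params maps gate name -> (W, b), W acts on [h_prev; x]."""
    z = h_prev + x  # list concatenation of previous hidden state and input

    def gate(name, activation):
        W, b = params[name]
        return [activation(a + bias) for a, bias in zip(matvec(W, z), b)]

    f = gate("f", sigmoid)    # forget gate: how much old cell state to keep
    i = gate("i", sigmoid)    # input gate: how much new candidate to write
    o = gate("o", sigmoid)    # output gate: how much cell state to expose
    g = gate("g", math.tanh)  # candidate values for the cell state
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def next_token_probs(text, vocab, hidden=8, seed=0):
    """Run an untrained LSTM over `text` and score each vocab entry as the next token."""
    rng = random.Random(seed)

    def mat(rows, cols):
        return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

    params = {name: (mat(hidden, hidden + len(vocab)), [0.0] * hidden)
              for name in "fiog"}
    W_out = mat(len(vocab), hidden)  # projects hidden state to vocabulary scores
    h, c = [0.0] * hidden, [0.0] * hidden
    for ch in text:
        x = [1.0 if ch == v else 0.0 for v in vocab]  # one-hot encoding
        h, c = lstm_step(x, h, c, params)
    return dict(zip(vocab, softmax(matvec(W_out, h))))

probs = next_token_probs("hello", vocab="helo ")
for token, p in probs.items():
    print(repr(token), round(p, 3))
```

An auto-completion feature would pick the highest-probability entries from a trained version of this distribution and offer them as suggestions.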

LSTM in Image Processing

In image processing, LSTM networks are commonly used to generate descriptive captions for images, a task known as image captioning. The visual features of the input image are typically extracted by a separate vision model, such as a convolutional network, and the LSTM network turns those features into a caption one word at a time. The resulting captions can improve accessibility for visually impaired users.

Moreover, LSTM-based image captioning systems have applications in content indexing, image retrieval, and multimedia content generation, contributing to advancements in computer vision and artificial intelligence.

LSTM in Speech Recognition

Speech recognition systems leverage LSTM networks’ ability to process sequential audio data, enabling accurate transcription and interpretation of spoken language. By analyzing the temporal patterns and phonetic characteristics of speech signals, Long Short-Term Memory networks can effectively convert spoken words into text, facilitating applications such as voice commands, dictation, and automated transcription services.

With advancements in deep learning and neural network architectures, LSTM-based speech recognition systems continue to improve in accuracy and robustness, paving the way for enhanced human-computer interaction and accessibility.

LSTM in Language Translation

Language translation is another important application of LSTM networks. LSTM-based translation systems typically read the input sentence in one language, build an internal representation of its semantic and syntactic structure, and then generate the corresponding sentence in another language.

By capturing the nuances of language and context, Long Short-Term Memory networks enable accurate and contextually relevant translations, bridging linguistic barriers and fostering cross-cultural communication. From online translation services to language learning platforms, LSTM-based translation systems play a pivotal role in facilitating global communication and collaboration.

Drawbacks of Using LSTM Networks

- Despite their advantages, LSTM networks have limitations and drawbacks.

- Gating mitigates the vanishing gradient problem but does not remove it, so very long sequences can still be hard to learn. LSTM-based models are also resource intensive to train, partly because each time step depends on the previous one, and they are susceptible to overfitting.

- Addressing these drawbacks requires ongoing research and development aimed at improving LSTM architectures and training methodologies.

- Nevertheless, for many sequence tasks the benefits of LSTM networks outweigh their limitations, making them a valuable tool in artificial intelligence and machine learning.
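The vanishing-gradient point in the list above can be illustrated with a little arithmetic. The sketch below is a simplified scalar model, not a full backpropagation: it multiplies the per-step factors that scale a gradient as it travels back through time. For a plain tanh RNN the factor is the recurrent weight times the tanh derivative; for an LSTM it considers only the direct cell-state path, where the factor is the forget gate. The function names and the chosen numbers (50 steps, weight 0.9, gate values 0.99 and 0.5) are assumptions picked for illustration.

```python
import math

def rnn_gradient_factor(w, pre_activations):
    """Product of w * (1 - tanh(a_t)^2) over all steps of a scalar tanh RNN."""
    factor = 1.0
    for a in pre_activations:
        factor *= w * (1.0 - math.tanh(a) ** 2)
    return factor

def cell_state_gradient_factor(forget_gates):
    """Product of forget gates along the direct cell-state path of an LSTM."""
    factor = 1.0
    for f in forget_gates:
        factor *= f
    return factor

steps = 50
rnn = rnn_gradient_factor(w=0.9, pre_activations=[0.5] * steps)
lstm_open = cell_state_gradient_factor([0.99] * steps)   # gate mostly open
lstm_closed = cell_state_gradient_factor([0.5] * steps)  # gate half closed

print(f"plain RNN after {steps} steps:         {rnn:.3e}")
print(f"LSTM cell path, forget gate 0.99: {lstm_open:.3e}")
print(f"LSTM cell path, forget gate 0.5:  {lstm_closed:.3e}")
```

With the forget gate close to 1 the gradient survives 50 steps largely intact, while the plain RNN factor collapses toward zero. When the forget gate sits well below 1, the LSTM factor collapses too, which is why vanishing gradients remain a practical concern on very long sequences.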

Conclusion

Long Short-Term Memory (LSTM) networks represent a significant advancement in sequential data processing. By addressing the limitations of conventional Recurrent Neural Networks (RNNs), LSTM networks enable more effective modeling of long-term dependencies and sequential patterns.

With applications spanning language modeling, image captioning, speech recognition, and language translation, LSTM networks continue to drive progress in artificial intelligence. As researchers and developers explore and refine LSTM architectures, these networks are likely to find use in further domains across machine learning and data science.