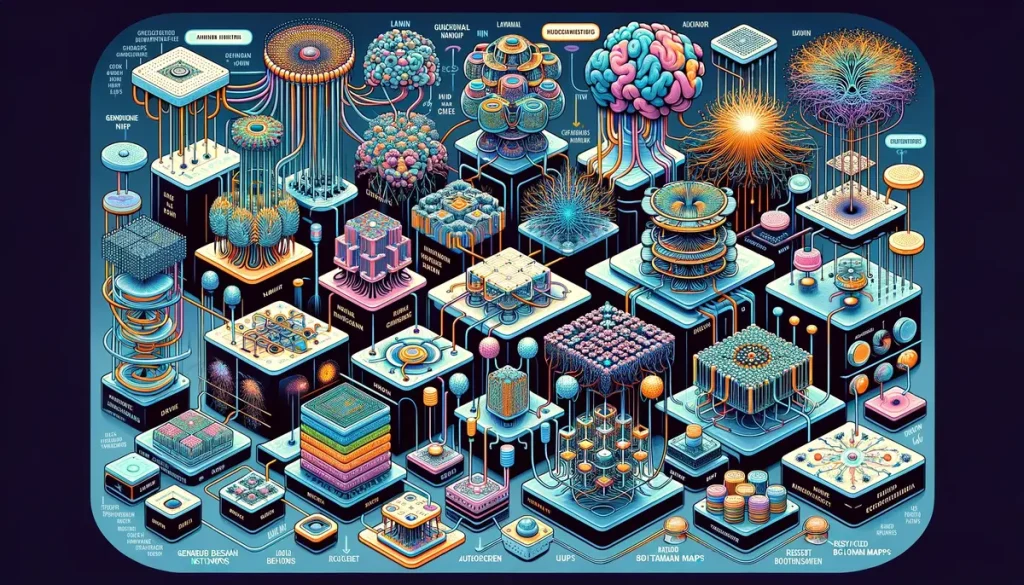

Have you ever wondered how artificial neural networks can learn useful structure from data without any labels? Curious about the idea behind Restricted Boltzmann Machines (RBMs)? Join us as we explore RBMs: where they came from, how they learn, and where they have been applied in unsupervised learning and data analysis.


Understanding Boltzmann Machines

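Boltzmann Machines, the precursor to the Restricted Boltzmann Machine, lay the groundwork for understanding RBMs. A Boltzmann Machine is a network of symmetrically connected, stochastic binary units. Instead of mapping inputs to a separate output layer, it splits its units into visible units, which represent the observed data, and hidden units, which capture dependencies among the visible ones; in the general model, any pair of units may be connected. Every joint configuration of the units is assigned an energy, and the machine assigns probabilities to configurations according to the Boltzmann distribution from statistical mechanics: the probability of a configuration is proportional to exp(-E), where E is its energy, so lower-energy configurations are exponentially more likely. This is what makes Boltzmann Machines energy-based models.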
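To make the energy-based view concrete, here is a minimal sketch for a tiny fully connected Boltzmann Machine with binary units. It assumes the standard energy E(s) = -sum over pairs i<j of w_ij s_i s_j - sum over i of b_i s_i, and the weights and biases are made-up values for illustration; the helper names `energy` and `boltzmann_distribution` are hypothetical. All units are treated alike here; in practice some units are designated visible, and the probability of a visible vector is obtained by summing over the hidden units.

```python
import itertools
import math


def energy(state, weights, biases):
    """Energy of a binary state in a fully connected Boltzmann machine.

    E(s) = -sum_{i<j} w_ij * s_i * s_j - sum_i b_i * s_i
    """
    n = len(state)
    pair_term = sum(
        weights[i][j] * state[i] * state[j]
        for i in range(n)
        for j in range(i + 1, n)
    )
    bias_term = sum(b * s for b, s in zip(biases, state))
    return -pair_term - bias_term


def boltzmann_distribution(weights, biases):
    """Exact P(s) = exp(-E(s)) / Z, found by enumerating all 2**n states."""
    n = len(biases)
    states = list(itertools.product([0, 1], repeat=n))
    unnormalized = [math.exp(-energy(s, weights, biases)) for s in states]
    z = sum(unnormalized)  # partition function: sum over every configuration
    return {s: u / z for s, u in zip(states, unnormalized)}


# Hypothetical 3-unit machine: symmetric weights with a zero diagonal.
W = [
    [0.0, 1.5, -0.5],
    [1.5, 0.0, 0.8],
    [-0.5, 0.8, 0.0],
]
b = [0.1, -0.2, 0.3]

probs = boltzmann_distribution(W, b)
# Print states from most to least probable (i.e. lowest to highest energy).
for state, p in sorted(probs.items(), key=lambda kv: -kv[1]):
    print(state, round(p, 3), "energy:", round(energy(state, W, b), 2))
```

Exact enumeration only works for very small networks, because the partition function Z sums over 2^n configurations. That cost is one reason practical Boltzmann Machines rely on sampling instead.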

The Evolution to Restricted Boltzmann Machines (RBMs)

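Restricted Boltzmann Machines refine Boltzmann Machines with one key constraint: there are no connections between units in the same layer. Visible units connect only to hidden units and hidden units only to visible units, so the network forms a bipartite graph. Because of this restriction, the hidden units are conditionally independent given the visible units (and vice versa), so an entire layer can be computed or sampled in a single step. This makes training far more practical than in a general Boltzmann Machine and lets the hidden layer learn a feature representation of the input data, often a more compact one, which helped make RBMs a prominent tool for unsupervised learning. Training adjusts the weights so that the training data becomes more probable under the model, typically with contrastive divergence, which compares statistics computed on the data with statistics computed on the model's own reconstructions. The learned features can then support downstream prediction tasks.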
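To make the restricted structure and the weight updates concrete, here is a minimal pure-Python sketch of a binary RBM trained with one-step contrastive divergence (CD-1). It assumes binary visible and hidden units, one training vector per update, and a made-up toy dataset; the class name `RBM`, its method names, and the learning rate are illustrative choices, not a reference implementation.

```python
import math
import random


def sigmoid(x):
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


class RBM:
    """Minimal binary RBM: visible units connect only to hidden units."""

    def __init__(self, n_visible, n_hidden, seed=0):
        self.rng = random.Random(seed)
        self.n_visible = n_visible
        self.n_hidden = n_hidden
        # W[i][j] links visible unit i to hidden unit j; no v-v or h-h weights exist.
        self.W = [[self.rng.gauss(0.0, 0.1) for _ in range(n_hidden)]
                  for _ in range(n_visible)]
        self.a = [0.0] * n_visible  # visible biases
        self.c = [0.0] * n_hidden   # hidden biases

    def hidden_probs(self, v):
        # Bipartite structure: each p(h_j = 1 | v) depends only on v, not on other h_k.
        return [sigmoid(self.c[j] + sum(v[i] * self.W[i][j] for i in range(self.n_visible)))
                for j in range(self.n_hidden)]

    def visible_probs(self, h):
        # Likewise, each p(v_i = 1 | h) depends only on h.
        return [sigmoid(self.a[i] + sum(self.W[i][j] * h[j] for j in range(self.n_hidden)))
                for i in range(self.n_visible)]

    def sample(self, probs):
        # Draw independent binary values from per-unit probabilities.
        return [1 if self.rng.random() < p else 0 for p in probs]

    def cd1_update(self, v0, lr=0.1):
        """One CD-1 step on a single training vector; returns reconstruction error."""
        ph0 = self.hidden_probs(v0)   # positive phase: driven by the data
        h0 = self.sample(ph0)
        pv1 = self.visible_probs(h0)  # reconstruct the visible layer
        v1 = self.sample(pv1)
        ph1 = self.hidden_probs(v1)   # negative phase: driven by the reconstruction
        # Gradient estimate: data correlations minus reconstruction correlations.
        for i in range(self.n_visible):
            for j in range(self.n_hidden):
                self.W[i][j] += lr * (v0[i] * ph0[j] - v1[i] * ph1[j])
        for i in range(self.n_visible):
            self.a[i] += lr * (v0[i] - v1[i])
        for j in range(self.n_hidden):
            self.c[j] += lr * (ph0[j] - ph1[j])
        # Squared reconstruction error: a rough progress monitor, not the objective itself.
        return sum((x - p) ** 2 for x, p in zip(v0, pv1))

    def reconstruct(self, v):
        # Deterministic pass using probabilities: v -> p(h|v) -> p(v|h).
        return self.visible_probs(self.hidden_probs(v))


# Hypothetical toy data: two complementary binary patterns.
data = [
    [1, 1, 1, 0, 0, 0],
    [0, 0, 0, 1, 1, 1],
]
rbm = RBM(n_visible=6, n_hidden=2, seed=42)
for epoch in range(2000):
    err = sum(rbm.cd1_update(v, lr=0.1) for v in data)
    if epoch % 500 == 0:
        print(f"epoch {epoch}: reconstruction error {err:.3f}")

for v in data:
    print(v, [round(p, 2) for p in rbm.reconstruct(v)])
```

The reconstruction error printed here is only a convenient monitor: contrastive divergence approximates the gradient of the data log-likelihood, and it does not directly minimize reconstruction error.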

Conclusion

Restricted Boltzmann Machines are a foundational model in machine learning, and they have shaped how practitioners approach unsupervised learning and predictive analytics. By learning features from unlabeled data, they show how a simple energy-based model can uncover structure that later supports prediction. As the field continues to evolve, the ideas behind RBMs remain a useful foundation for data-driven insights. If you work with unlabeled data, RBMs are well worth understanding as a step toward better predictive models and data-driven decision-making.